Climate finance: Earning trust through consistent reporting: Chapter 4

Bilateral climate finance reporting: A qualitative review

The quantitative evidence in Chapter 3 suggests a wide disparity in the quality of climate finance reporting. This chapter reviews some of the reporting practices across a selection of providers, to better understand the drivers of these inconsistencies. This information has been obtained through a number of key informant interviews with officials and ex-officials who have expertise in their countries’ reporting, OECD and UNFCCC staff, and a supplementary review of relevant documents.

The sample of countries whose officials we interviewed was not representative, reflecting in part the willingness of officials to have conversations about their country’s reporting. However, the conversations provide us with rich information on some of the challenges faced in climate finance reporting, different views on best practices and specialists’ thoughts on what could enhance consistency. This chapter briefly outlines the main features of climate finance reporting in the countries whose officials we interviewed, then discusses some of the key issues highlighted during our conversations.

Features of climate finance reporting

Most comes from development budgets

The vast majority of bilateral climate finance comes not from dedicated budgets, but from usual development programming, with projects assessed for their climate relevance. There are exceptions: the Netherlands has the ‘climate pool’, and the UK has various funds within the Department for Energy Security and Net Zero [1] that are exclusively focused on climate objectives (all of which are counted as climate finance). Such funds are small in comparison to climate finance that originates from development budgets. Therefore, climate finance comes largely from budgets that already have multiple objectives, although of course some individual projects from these budgets do have sole climate objectives. By way of multilateral comparison, most bilateral climate finance comes from funds that more closely resemble those managed by the International Development Association (primarily focused on development, but with projects screened for climate focus) than those managed by the Green Climate Fund (established primarily to focus on climate).

This makes sense in so far as the distinction between climate and development finance is blurry at best, and it is legitimate to recognise the potential climate impacts of development projects (and development goals should be considered when designing climate projects). On the other hand, optimising on multiple objectives is hard, and where climate finance comes from dedicated funds there is a reduced chance that projects have been labelled as climate finance despite only cosmetic alterations.

Project originators usually decide initially on what counts as climate finance

Because projects often feature climate as one objective among many, the questions of how the climate focus of projects is determined, and who is responsible for doing so, are significant. In most countries, the initial decision on whether a project is climate finance (or to what extent) is taken by project originators (those responsible for writing the business proposal and designing the project). The decision is usually then checked by a quality assurance team, who examine either a selection of projects or the entire portfolio. The exact nature of this process differs: in some countries reviewers assess the selected projects independently of the initial decision, but in most they know the original classification. Most countries we interviewed also engage independent climate experts to assess projects.

For projects that receive a different classification during the review, there is generally a discussion between the quality assurance team and the project originators, with the latter providing further information on why the initial decision was made. Often, these discussions arise simply because there is insufficient detail in the project description to decide. Countries differ in terms of who has ultimate authority to decide on the marking.

Not all countries follow this format. For example, the US starts by asking all of the departments that engage in external financing to cast a wide net for any financial commitments that may be related to climate. After receiving a list of projects, a centralised team of climate experts goes through each to determine the proportion of commitments that will be counted as climate finance.

Differences in reporting to the UNFCCC and the OECD

In most cases, and especially where the Rio markers are used as the basis of reporting, the tabular data submitted to the UNFCCC comes directly from data compiled for the OECD (the vast majority of projects are from ODA budgets). However, there are exceptions. In the US, USAID is responsible for submitting data to the OECD and the State Department is responsible for submitting data to UNFCCC, and in practice, the approach to identifying which projects will count as climate finance is separate from the (OECD) Rio-marking process.

Independence of quality assurance units

Several officials highlighted that their quality assurance units are located outside of ministries and so are relatively free from political pressure, which they viewed as important. Reviewers are statisticians (or consultants) whose job is to assess the correspondence between reporting instructions (usually the Rio marker handbook) and the markers applied to the projects they review. They have no responsibility for hitting the ambitious climate finance targets announced by politicians, and are not accountable to those who are.

Ad-hoc reviews

In addition to regular quality assurance of the data as it is produced, many countries have agencies produce additional reports on the degree of accuracy of their climate finance reporting, for example, from the Auditor General’s Office in the case of Denmark, or the Independent Commission on Aid Impact in the UK. The OECD also facilitates statistical peer reviews, whereby DAC members examine the aid reporting practices of other countries and provide feedback. These reviews are not exclusively focused on climate but do include an assessment of the use of policy markers (including the Rio markers).

Internal guidelines on marking

Countries differ as to whether they have their own internal documents that aid officials in determining the climate component of projects. Many rely on the OECD marker handbook (discussed later in the chapter), whereas others supplement this with additional guidance, including FAQ documents and examples of typical activities.

The Rio markers: Pros and cons for climate finance reporting

One of the most obvious differences between countries is whether they base their UNFCCC reporting on the Rio markers, or whether they have adopted an alternative approach. The majority of reporters use the markers as the basis for their submissions (18 out of 23 of the respondents to the OECD’s 2022 survey on the Rio markers), [2] which places considerable importance on the guidelines set out by the OECD, and the OECD’s own system of quality assuring data.

Overview of the Rio markers

The marker approach essentially involves checking to see whether a project explicitly mentions a climate objective (the bar for counting as significant) or whether a climate objective is fundamental to the design of a project (which would count as principal). The requirement that an objective must be explicitly stated helps to guard against the inclusion of projects that are merely ‘mainstreaming’ climate considerations (projects that have been amended to reduce a potential harmful impact on the climate, but are not expected to have a positive one).

As implied by article 2.1(c) in the Paris Agreement, all finance should be consistent with the aim of limiting global warming to 1.5°C above pre-industrial levels, but this does not mean that all finance should be counted as climate finance. Some officials told us that project managers responsible for the initial assessment of climate-relevance would assign a significant marker on the basis that a project had been designed with an ‘environment perspective’, but in such cases (where there were no explicit climate objectives) the use of the marker would be challenged.

After agreeing a project’s markers, a fixed coefficient is then generally applied (depending on the combination of significant and principal markers). In all but one case, countries using the markers count 100% of funding to principal-marked projects as climate finance (Switzerland counts 85%). [3] The coefficient on significant-marked project ranges more broadly, and this feature of the markers (discussed below) has received substantial criticism.

Of the countries that do not use the markers, most try to assess a more precise percentage of the project to include as climate finance on a case-by-case basis. This is generally achieved by examining the different components of projects, such as individual activities or expenditure lines. In cases where detailed expenditure is not available, those employing this case-by-case approach would work with whatever other information is available (for example, if a contract within a project has three separate objectives, of which one is related to climate, this would be assigned a coefficient of 33%).
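The contrast between the two approaches described above can be sketched in a few lines of Python. This is a minimal illustration only: the function names are hypothetical, the 40% coefficient for significant-marked projects is an assumed value (coefficients vary by provider), and the case-by-case share assumes equal weighting of objectives, as in the three-objective example.

```python
# Rio marker scores: 0 = not targeted, 1 = significant, 2 = principal.
# ASSUMPTION: 40% for significant is illustrative only; providers differ.
MARKER_COEFFICIENTS = {0: 0.0, 1: 0.40, 2: 1.0}


def marker_based_amount(commitment, marker, coefficients=MARKER_COEFFICIENTS):
    """Climate finance counted under a fixed-coefficient marker approach."""
    return commitment * coefficients[marker]


def case_by_case_share(objectives):
    """Share of objectives flagged as climate-related.

    `objectives` is a list of booleans, one per stated objective.
    ASSUMPTION: every objective carries equal weight.
    """
    climate_count = sum(1 for is_climate in objectives if is_climate)
    return climate_count / len(objectives)


def case_by_case_amount(commitment, objectives):
    """Climate finance counted when the share is assessed per project."""
    return commitment * case_by_case_share(objectives)


if __name__ == "__main__":
    # A contract with three objectives, one of which relates to climate.
    share = case_by_case_share([True, False, False])
    print(f"case-by-case share: {share:.0%}")
    print("marker-based (significant):", marker_based_amount(100.0, 1))
    print("marker-based (principal):", marker_based_amount(100.0, 2))
```

Under these assumptions the same contract would be counted at roughly a third of its value case by case, but at whatever fixed coefficient the provider has chosen under the marker approach.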

The officials we spoke to in the countries that implement a case-by-case approach held a strong view that it was a better method, given that it tries to establish a number using a bottom-up approach based on the individual features of projects. The Rio markers were never intended to be quantitative, and are arguably too crude to measure co-benefits accurately. Outside commentators share this view, for example, the ONE campaign describes the countries employing a case-by-case approach as “getting it right”. [4]

At the same time, proponents also acknowledge that there are complications. In particular, there is a much greater resource burden in trying to assess a separate coefficient for each project. One official also voiced the concern that an increasing share of climate finance comes from projects with small climate finance shares, which suggests that much of the increase could come from small alterations to existing projects (something also noted of the World Bank). [5] While this can also be true under the marker system, the need to count a minimum, fixed percentage may deter countries from counting projects with only marginal alterations. [6] Furthermore, while the marker system is crude, it does benefit from another layer of quality assurance conducted by the OECD, which may have promoted consistency among countries using the markers.

Box 1: The role of the OECD

Through the Paris Agreement, developed countries are required to report the external climate finance they provide as part of the Enhanced Transparency Framework. Given that this requirement is under the Paris Agreement, the OECD has no formal role in monitoring the provision of climate finance or ensuring it is consistent. Nevertheless, the OECD has adopted a key role in the measurement of climate finance for three reasons:

First, there is the obvious overlap between members of the OECD and the developed countries that are required to provide climate finance as part of the Paris Agreement. Historically, when the Framework Convention entered into force, the list of ‘developed’ countries was based on OECD membership. Most of the projects that get reported are also ODA or other official flow projects, and are therefore within the mandate of the OECD to track.

Second, given that the Paris Agreement did not specify how climate finance should be measured, or provide any definition suitable for practical measurement, countries had no official guidance on which to base their reporting. The existence of the Rio markers – introduced by the DAC to track spending related to the Rio Convention – filled this vacuum and became the most common way of tracking bilateral finance that was relevant to the Paris Agreement. In fact, it was only agreed that an adaptation marker would be introduced in 2009 – the same year as the US$100 billion goal was agreed – and the marker was developed in conjunction with UNFCCC counterparts. Therefore, while the markers are in no sense underpinned by any UNFCCC agreement, they have nevertheless assumed significance in climate finance reporting.

Finally, developed countries requested that the OECD track progress towards the US$100 billion goal in 2015, and it has done so since. [7]

Benefits of the Rio markers: OECD efforts to harmonise and quality-assure data

The main benefit of the marker system as it stands is that the OECD has taken an active role in monitoring the implementation of the markers. This has added a much greater degree of quality assurance than there otherwise would have been and has likely promoted consistency in reporting (see Figure 4.2), although significant problems remain. According to the OECD, even countries that do not use the markers to report to UNFCCC are nevertheless concerned about the accuracy of the markers, given the political attention they receive.

The basis of the system is the Rio marker handbook, [8] which – aside from definitions for the markers – contains an indicative table detailing suggested Rio markings for projects focusing on different sectors, along with numerous worked examples applying the markers to specific projects. In addition, the OECD Secretariat frequently holds in-depth briefings and workshops with officials who are responsible for applying the markers correctly, and facilitates the voluntary statistical peer reviews in which the markers also feature. For some countries, the OECD even goes through projects line by line to assess the quality of reporting.

While the OECD provides a substantial additional layer of quality assurance, it does not have authority to ‘correct’ projects that are not marked appropriately. It can point out structural inconsistencies (although these line-by-line assessments are not public), but it is then up to the countries whether or not they make changes. And even if the OECD did have the authority to make changes, this would not necessarily have a bearing on how things are reported to UNFCCC. Similarly, the statistical peer reviews provide useful feedback on reporting issues, including with the markers, but countries must voluntarily enter into these reviews (whether as reviewers or reviewees) and there could naturally be a selection bias, as the countries that care least about the accuracy of reporting are less likely to engage in the process.

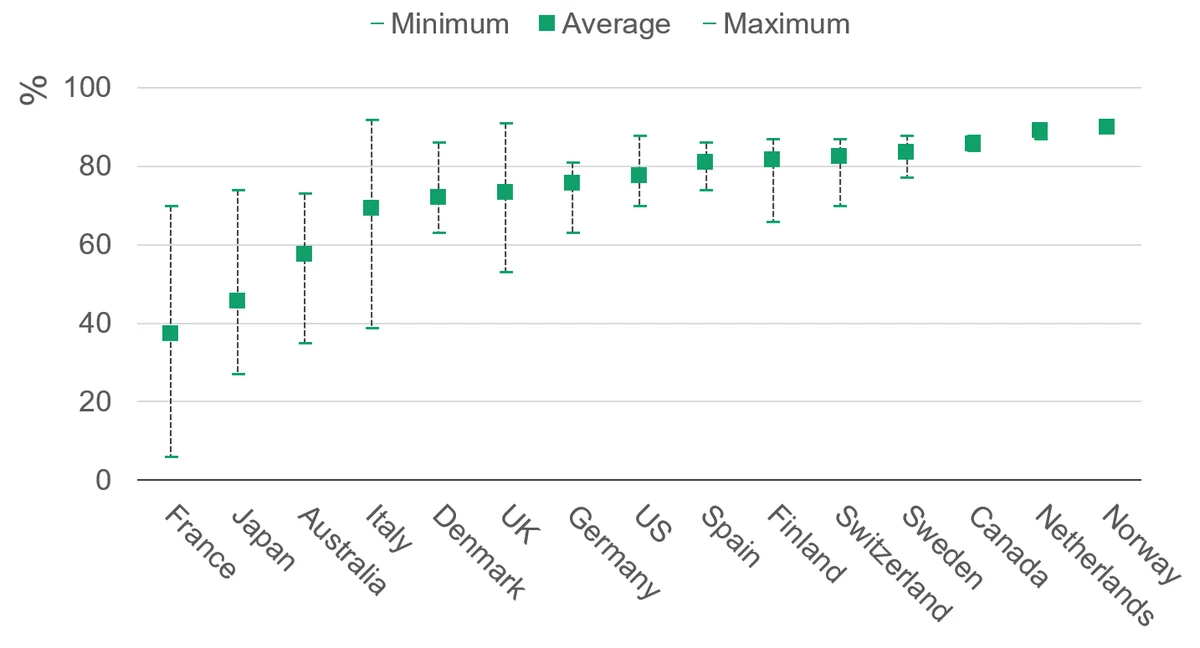

We assessed the importance of this additional assurance by examining adherence to the OECD’s suggested markings in the handbook’s indicative table. We performed this exercise for the 15 largest providers of climate finance (according to UNFCCC data). [9] Figure 4.1 shows the degree of correspondence between the indicative table and countries’ markings, as measured by the percentage of projects that had the same marker as suggested by the purpose code (with half marks given when the marker was the second suggestion, where applicable). This shows that, generally, there is a high degree of consistency between most countries’ markings and the indicative table, but with two major exceptions: France and Japan.
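The scoring rule described above (full marks when the applied marker matches the first suggestion, half marks when it matches the second) can be sketched as follows. This is an illustrative reconstruction, not the code used for the analysis: the function name, the data layout and the sector labels are hypothetical, and skipping projects whose purpose code has no suggested marking is an assumption.

```python
def correspondence_score(projects, indicative_table):
    """Percentage correspondence between applied and suggested markers.

    projects: list of (purpose_code, applied_marker) tuples.
    indicative_table: dict mapping purpose_code to a list of suggested
        markers, first suggestion first, optional second suggestion next.
    """
    points = 0.0
    scored = 0
    for purpose_code, applied_marker in projects:
        suggestions = indicative_table.get(purpose_code)
        if not suggestions:
            # ASSUMPTION: projects without a suggested marking are skipped.
            continue
        scored += 1
        if applied_marker == suggestions[0]:
            points += 1.0  # matches the first suggestion: full mark
        elif len(suggestions) > 1 and applied_marker == suggestions[1]:
            points += 0.5  # matches the second suggestion: half mark
    return 100.0 * points / scored if scored else 0.0


if __name__ == "__main__":
    # Hypothetical sectors and markings, for illustration only.
    table = {"sector_a": [2, 1], "sector_b": [0], "sector_c": [1, 2]}
    sample = [("sector_a", 2), ("sector_a", 1), ("sector_b", 1), ("sector_c", 1)]
    print(correspondence_score(sample, table))
```

In the hypothetical sample, the four projects earn 1, 0.5, 0 and 1 points respectively, so the score is 2.5 out of 4.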

Figure 4.1: Most countries show reasonable adherence to the OECD guidance on Rio markers

Degree of correspondence between mitigation-marked projects and the OECD’s suggested markings, 2013–2022

Source: Development Initiatives’ analysis of OECD CRS data and OECD DAC Rio markers for Climate Handbook.

Notes: The results are very similar for the adaptation marker, but the ranges are greater, potentially indicating the higher degree of crossover between adaptation and development goals.

Of these 15 countries (covering around 97% of climate-marked ODA), eleven had an average degree of consistency of over 70% over this period, suggesting that the markers they applied largely follow the suggestions contained within the indicative table. However, two of the four largest providers – Japan and France – deviated significantly, suggesting their reporting is out of sync with OECD guidance and other providers. Australia’s correspondence was also significantly lower than average.

The OECD handbook table is merely indicative and there can be sensible reasons for divergence. For example, one French loan was in the ‘government and civil society’ sector (with a recommended mitigation marker of 0) but was provided to support Georgia in restructuring its energy sector to make it more efficient, [10] so justifiably given a principal marker. However, other French loans were for ‘health systems strengthening’ [11] or ‘financing of the “management for citizenship” programmes’, [12] with no apparent reason as to why they had received a significant mitigation marker. In the case of Japan, over 70% of the projects with a higher mitigation marker than recommended are in the non-renewable energy sector.

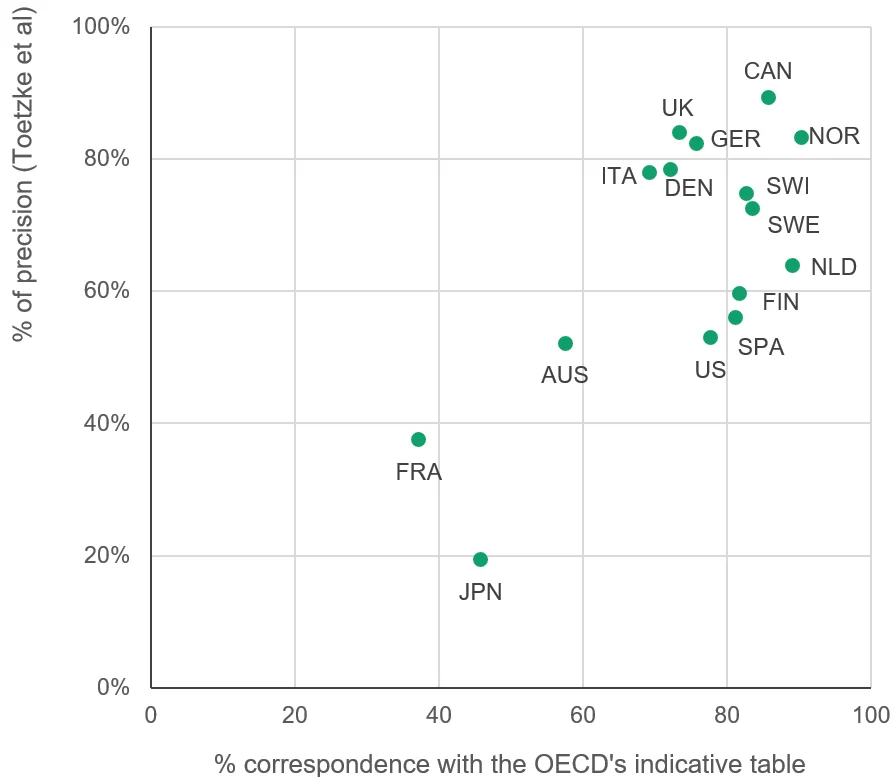

In addition, the degree of correspondence closely correlates with other measures of accuracy. The two biggest outliers (France and Japan) were also judged as having far less reliable climate finance reporting by Toetzke et al (2022), who used a machine learning (specifically, natural language processing) model to investigate the climate relevance of countries’ reporting (see Chapter 3).

Figure 4.2: Strong correlation between adherence to recommended markings and quality of reporting (according to machine learning model) – with France and Japan outliers on both measures

Precision from Toetzke et al (2022) against average correspondence with OECD indicative table between 2013 and 2022

Source: Development Initiatives’ analysis of OECD CRS data and the OECD DAC Rio Markers for Climate Handbook, Toetzke et al (2022).

Note: This chart combines data from Figures 3.5 and 4.1.

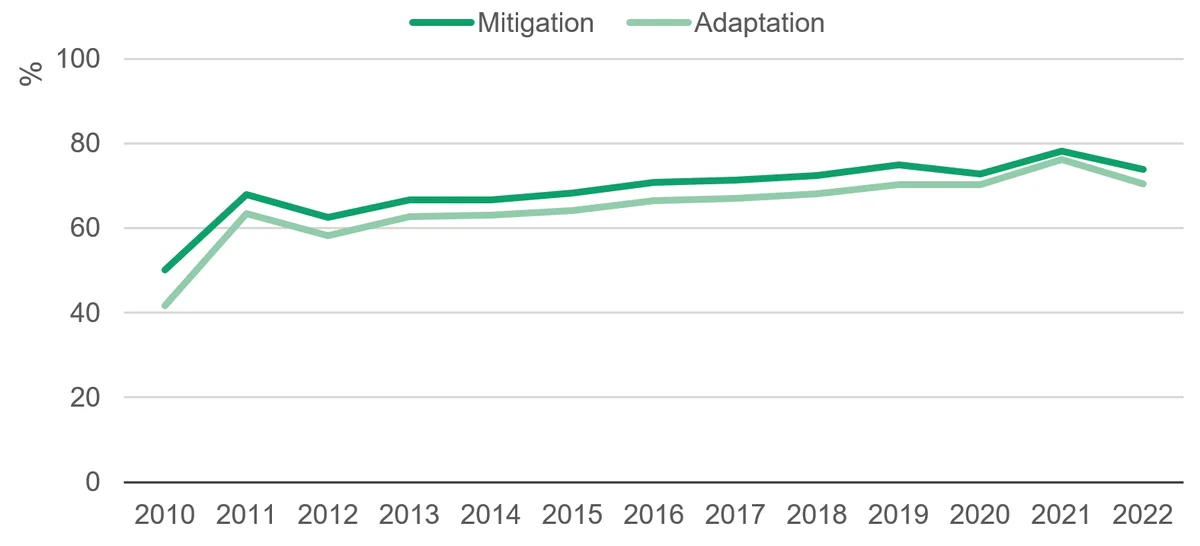

There has been an upwards trend in correspondence over time. In 2010, the average correspondence between recommended and actual mitigation markers was 50%, and 42% for adaptation. By 2022, this had risen to 74% and 70%. A lot of this increase occurred in 2011 (when correspondence was 68% for mitigation and 63% for adaptation) and therefore may simply reflect the fact that the markers were being newly repurposed for tracking progress towards climate finance goals. [13] Nevertheless, there have been increases since, suggesting that the efforts made by the OECD to promote consistency are having an impact. For the two countries least consistent with the handbook – France and Japan – this trend has been less apparent, although Japan’s correspondence with OECD markings increased sharply in 2022 (from around 43% in 2021 to 74% for mitigation, and from 45% to 74% for adaptation).

Drawbacks of the Rio markers: subjective and imprecise even with good faith implementation

Despite the additional layer of review provided by the OECD, the implementation of the markers remains controversial. Even if the OECD did have the authority to amend markings that do not adequately reflect a project’s focus, the lack of granularity means that the amount of climate finance recorded on individual projects is possibly inaccurate, and leaves the door open for exaggeration.

The main concern with the marker approach is that by counting a fixed share of project funding as climate finance, the total could be overstated, as the share of many projects counted may be too high compared to the actual climate investment associated with the project. This concern has been echoed in numerous reports and UNFCCC submissions, such as the Oxfam shadow report, [14] and submissions to the SCF on climate definitions. [15] In principle, this should not be a problem if the coefficients on the markers are set appropriately: i.e. so long as the coefficient is set according to the average relevance of projects with a particular marker, total climate finance will not be overstated, even if measured climate finance is wrong at the project level.
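The aggregate argument in the preceding paragraph can be illustrated with a small sketch. The project figures below are invented for illustration, the function names are hypothetical, and the sketch assumes that ‘average relevance’ means the funding-weighted average of each project’s true climate share, which is an interpretation rather than something stated by any provider.

```python
def aggregate_totals(projects, coefficient):
    """Return (reported, actual) climate finance for one marker category.

    projects: list of (funding, true_climate_share) tuples.
    coefficient: the fixed share applied to every project in the category.
    """
    reported = sum(funding * coefficient for funding, _ in projects)
    actual = sum(funding * share for funding, share in projects)
    return reported, actual


def funding_weighted_average_share(projects):
    """Funding-weighted average of the true climate shares."""
    total_funding = sum(funding for funding, _ in projects)
    climate_funding = sum(funding * share for funding, share in projects)
    return climate_funding / total_funding


if __name__ == "__main__":
    # Hypothetical significant-marked projects: (funding, true climate share).
    projects = [(100.0, 0.1), (100.0, 0.5), (200.0, 0.6)]

    calibrated = funding_weighted_average_share(projects)
    reported, actual = aggregate_totals(projects, calibrated)
    # Each project is mis-measured, but the totals agree.
    print(calibrated, reported, actual)

    # A coefficient above the average relevance overstates the total.
    print(aggregate_totals(projects, 0.6))
```

With a calibrated coefficient, every individual project is over- or under-counted, yet the errors cancel in the total; any coefficient above the average relevance overstates the total.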

However, this is obviously difficult to judge, likely to change over time, and the process for setting the coefficients does not suggest that they have been well-calibrated to reflect the average relevance of projects. Common responses as to why coefficients have been set at their current level were either that they “were a reasonable approximation” or “in line with other donors”. This is understandable given the complexity of adequately calibrating the coefficients, but it does not suggest that the markers capture climate finance accurately.

In addition, there are reasons to believe either that the average climate relevance of projects has increased over time (as climate becomes a bigger priority, and more and more projects incorporate a greater share of climate objectives) or that it has decreased (as the political pressure to meet climate finance targets leads to looser interpretation of what counts as an objective). DI has found evidence for the latter, [16] as have other authors, [17] but the net impact is still ambiguous. This makes it likely that the appropriate coefficients to use also change over time, but countries very rarely change the coefficients used in reporting.

Evidence from other audits on quality of markers is concerning

Evidence from various reviews of how markers are used is not encouraging, even in countries that appear to be making an effort to apply them rigorously and in good faith. For example, the Danish Auditor General’s Office reviewed the quality assurance reports of Denmark’s climate aid between 2016 and 2018. [18] The reviewers came to a different conclusion about the correct marker to use in more than half of project commitments over this period. This includes projects that should have been marked as climate, and vice versa – and even disagreements about whether the main focus was adaptation or mitigation. However, the review also found that Denmark’s method was in accordance with the OECD’s guidance. The issue was not adherence to the rules, but that the rules themselves left too much room for discretion.

Similar concerns were raised by the European Court of Auditors, who produced a special report entitled ‘Climate spending in 2014–2020 EU budget – not as high as reported’. [19] This report was not focused on international climate finance, but the assessment methodology was taken from the OECD marker system. Among the findings were that “similar projects received different coefficients”, “coefficients are attributed at different levels of detail” [20] and that “the climate coefficients used for nine of the 24 Horizon 2020 projects we examined were not reasonable, as they had a weaker link to climate action than claimed”. [21]

The Policy and Operations Evaluation Department (IOB) of the Netherlands reached a similar conclusion, [22] noting that the marker system “is imperfect and leaves a lot of room for interpretation by individual desk officers”, and that “for activities marked as ‘significant’, it is often harder to see to what extent climate impact was successfully integrated into the project”. [23]

These reviews share common features, highlighting the subjectivity required in the assessment of the markers, and the tendency for them to overstate the totals, echoing concerns raised by civil society. These are far from the only reviews to find that the marker system fails to capture climate finance adequately, but they are notable in coming from the provider governments themselves, and in the case of the Danish audit office, the criticisms were coupled with the finding that the rules had been fully adhered to. The system was the problem, not the implementation.

No consensus that markers are inferior

Despite these criticisms, not all officials agreed that a case-by-case approach was superior. One practical reason raised by several officials was that such an approach is more resource intensive (one official told us it made them feel “tired” just thinking about the additional effort required). Quality assurers – where they exist – cannot just confirm that a project has a climate objective, but have to balance this objective against all the others to determine its relative importance.

In addition, if countries are not required to report the share of projects that are counted as climate finance, and there is no way of discerning this from available data, then there is a serious transparency problem. At times, this can facilitate greater overstatement of climate finance. For example, the French loan to Tanzania for ‘Phase 5 of the Bus Rapid Transit (BRT) of Dar es Salaam’ [24] has a significant marker, indicating that climate is not the main objective, but nevertheless the loan appears to have been counted in full in UNFCCC reporting.

More fundamentally, another reason is that if markers are applied at a sufficiently granular level, the difference becomes less important. For example, if a project consists of multiple components, some of which are focused on climate, then a single fixed coefficient is unlikely to accurately reflect the balance of climate and other objectives. However, if the marker is applied at the component level (rather than for the overall project), then this may be tantamount to a case-by-case approach. For example, the UK reports climate-finance shares of projects that range from near zero to 100%. But these coefficients are determined by evaluating the climate-relevance of individual project components. The UK project “Support to Bangladesh’s National Urban Poverty Reduction Programme” [25] has five separate elements, two of which count in full as climate finance [26] when reporting to UNFCCC, and the rest of which count as zero. So, while at the project level 48% of the project funding was counted as climate finance in 2022, this was the full expenditure on the two climate components; the classification at the component level was binary.
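The way a binary classification at component level produces a fractional share at project level can be sketched as below. Only the structure (five elements, two counted in full) and the 48% result come from the example above; the individual expenditure figures are hypothetical, chosen so that the two climate components sum to 48 out of 100, and the function name is illustrative.

```python
def project_climate_share(components):
    """Project-level climate share from binary component classifications.

    components: list of (expenditure, is_climate) tuples. Each component
    counts either in full or not at all.
    """
    total = sum(expenditure for expenditure, _ in components)
    climate = sum(
        expenditure for expenditure, is_climate in components if is_climate
    )
    return climate / total


if __name__ == "__main__":
    # HYPOTHETICAL expenditure figures for a five-component project.
    components = [
        (30.0, True),   # climate component, counted in full
        (18.0, True),   # climate component, counted in full
        (20.0, False),  # counted as zero
        (22.0, False),  # counted as zero
        (10.0, False),  # counted as zero
    ]
    print(f"{project_climate_share(components):.0%}")
```

No component is assigned a partial coefficient; the fractional project-level figure arises purely from the mix of components.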

One official told us that when budgeting for climate expenditure, the climate share of whole programmes is estimated based on the planned activities, but that when it comes to reporting to the UNFCCC, the markers are applied to individual disbursements. In practice this is similar to ‘case-by-case’ approaches adopted by some countries.

Not all climate objectives are created equal

The requirement from the marker handbook that objectives need to be explicitly stated has been an important criterion for quality assurance units. Officials told us that they have questioned the marking of multiple projects based on this criterion not being satisfied, especially in contexts where climate objectives are being ‘mainstreamed’ into all projects. In these cases, project managers may feel that all projects have some relevance to climate, even if there is no specific objective/indicator stated in the documentation; quality assurers would push back in these instances.

However, merely stating an objective is not sufficient to guarantee that a project will have a noticeable impact on it, and whether or not an objective is fundamental to the project’s design is a question of motivation that is hard for others to assess. Every project is likely to have some impact on a range of climate goals, some positive, but merely listing the potential positive impacts as objectives should not be sufficient for a project to count as climate finance.

What is a fundamental objective?

When a country applies the principal marker, it is claiming that climate objectives are fundamental to the design of the project, and that the project would not have happened otherwise. These claims are often doubtful. For example, South Korea claimed that a US$100 million loan to Colombia with the description “seeks to support the economic growth of the country, in a context of health emergency due to Covid-19” had a principal mitigation focus. The objectives listed in the description were highly relevant for mitigation, but the fact that the purpose given was “Covid-19 control” casts doubt on the claim that these objectives were fundamental.

Another example is rail transport. In 2022, nearly 40% of principal-marked mitigation ODA disbursements were to the rail transport sector, indicating that these projects would not have happened if not for their climate objective. But transport infrastructure is clearly a developmental necessity that would be required regardless of whether there was a climate crisis: copious academic evidence [27] finds strong links between infrastructure and development outcomes, and indeed many countries have been investing in rail transport for years without discussing its climate impacts.

Often countries disagree on whether an objective is fundamental even for the same programme. Core funding for NGOs and public–private partnerships provides clear examples. Sweden’s core contributions to the International Union for the Conservation of Nature were given a principal adaptation marker during 2013–2016. Over that same period, Denmark gave the same activity a significant marker, and Japan gave a zero marker (indicating no adaptation focus). Countries may have had different motives for providing this funding, but given these are core contributions, they were all funding the same thing. Similarly, during 2020, many countries provided the Coalition for Epidemic Preparedness Innovations with core funding to help it develop a Covid-19 vaccine, but only Japan counted this funding as having a significant adaptation focus.

Many of the Technical Expert Dialogue discussions that are informing the development of the NCQG highlight that the quality of climate finance is as important as its quantity. The fact that the estimated impact of climate finance varies widely by project supports this view, and suggests that greater reporting on expected impact is essential, as otherwise it is difficult to substantiate claims about climate objectives being fundamental.

Climate finance reporting systems: Good intentions, bad rules

The officials that we interviewed were all concerned with the good faith implementation of the system their country was operating, and multiple levels of reviews provided some guard against excessive marking by project originators.

However, the most common system of reporting – the Rio markers – is clearly not ensuring that climate finance is measured consistently across time or across providers. Every review of the marker system has found disagreements about the correct markings of projects and highlighted the degree of subjectivity involved in assessments. More or less conservative assessments of the climate share of project funding could explain much of the variation in climate finance provision across countries, and there is evidence that changing reporting standards across time explains much of the increase in climate finance.

Nor is the system adequate for assessing how well we are addressing the needs of partner countries. This is not just because of the contentiousness of some of the marking – there is no requirement that climate finance is linked to any measure of need and there are wild differences in the ex-ante estimates of projects' impacts on climate goals.

Trying to assess the specific climate share of projects, as some countries currently do, holds more promise, but is complicated by a number of factors. Many countries do not currently have the resources to implement such an approach, and without the same level of statistical infrastructure that exists for the marker system, there is no guarantee that countries will measure climate finance more consistently or accurately if they move away from the markers. In fact, Japan previously claimed to use a case-by-case approach, [28] but in practice counted all its climate finance loans at 100% (whether marked significant or principal). In addition, while the OECD has no official role in assessing climate finance, its oversight of the marker process has led to additional scrutiny of how countries produce figures, which would be lost if countries moved away from the markers.

Finally, moving towards a case-by-case approach is not a panacea. There is clearly still room for interpretation, as highlighted by the UK’s recent decision to ‘scrub’ existing activities to find additional activities that could be included. As discussed in Chapter 3, whether changing classifications after the fact makes climate finance estimates more or less accurate is ambiguous, but either the classifications were wrong then, or they are now, and either is problematic for interpreting trends. Similarly, the World Bank assigns different coefficients to each project, but the relevance of many projects has still been questioned. [29]

The next and final chapter discusses some recommendations, drawn from our conversations with specialists, that would promote greater climate finance consistency.

Notes

[1] This department was created in 2023, when the Department of Business, Energy & Industrial Strategy split to form the Department for Business and Trade, the Department for Energy Security and Net Zero and the Department for Science, Innovation and Technology.

[2] OECD DAC, 2022. DAC Working Party on Development Finance Statistics: Results of the Survey on the Coefficients applied to 2019-20 Rio Marker Data when Reporting to the UN Environmental Conventions. Available at: https://one.oecd.org/document/DCD/DAC/STAT(2022)24/En/pdf#page=7

[3] OECD DAC, 2022. DAC Working Party on Development Finance Statistics: Results of the Survey on the Coefficients applied to 2019-20 Rio Marker Data when Reporting to the UN Environmental Conventions. Available at: https://one.oecd.org/document/DCD/DAC/STAT(2022)24/En/pdf#page=7

[4] ONE, 2023. Data commons, the Climate Finance Files. Available at: https://datacommons.one.org/climate-finance-files

[5] The Breakthrough Institute, 2023. What Counts as Climate Finance? Available at: https://thebreakthrough.org/issues/energy/what-counts-as-climate