Is the SDG monitoring framework broken?

As the 2023 World Data Forum gathers in Hangzhou, DI discusses challenges within the existing SDG monitoring system and proposes constructive reform.

Introduction: Is there a problem?

The Millennium Development Goals (MDGs) came to an end in 2015. In 2012, a task team was established to plan for a successor. It concluded that to accomplish the necessary transformative change, consideration should be given to a longer time horizon for the post-2015 agenda, “possibly from 2015 to anywhere between 2030 and 2050”. [1]

Between January 2014 and June 2015, a large-scale consultation was held involving development experts, statisticians, academics, global bureaucrats and government officials, and nearly 500 groups including governments, multilaterals, think tanks, and civil society organisations (CSOs). Its aim was to determine how a comprehensive indicator framework might be established to support the new goals. It proposed the adoption of 100 global indicators accompanied by complementary national indicators. [2]

The scientists and statisticians involved in these consultations called for a realistic approach: 100 indicators to be met by 2050. The diplomats disagreed. In 2016, the Sustainable Development Goals (SDGs) were launched with more than double the number of recommended indicators to be met within half the time.

In March 2023, the UN Economic and Social Commission for Asia and the Pacific published its annual SDG progress report. [3] It warned that the region requires, at the current rate of progress, a further 42 years to meet the SDGs. It also revealed that there remains insufficient data to monitor 51 of the 169 targets.

This discussion paper focuses on the SDG global monitoring system from a data perspective. It attempts to answer the question: Is the SDG monitoring framework broken?

The SDGs are the flagship development agenda of the United Nations and were adopted by all 193 UN member states in September 2015. [4] They replaced the Millennium Development Goals (MDGs; 2000–2015) and will draw to a close at the end of 2030. [5]

“The SDGs are a call for action by all countries – poor, rich and middle-income – to promote prosperity while protecting the planet. They recognize that ending poverty must go hand-in-hand with strategies that build economic growth and address a range of social needs including education, health, social protection, and job opportunities, while tackling climate change and environmental protection.”

The United Nations, 17 Goals to Transform our World. Available at: https://www.un.org/sustainabledevelopment/

Leave no one behind is the central, transformative promise of the SDGs. It represents the unequivocal commitment of all UN member states to eradicate poverty in all its forms, to end discrimination and exclusion, and to reduce the inequalities and vulnerabilities that undermine the potential of individuals and of humanity as a whole.

The global indicator framework to monitor the SDGs was finalised in July 2017. It was designed by the Inter-agency and Expert Group on SDG Indicators (IAEG-SDGs, hereafter referred to as the IAEG), [6] which is also responsible for its ongoing implementation and for making updates and revisions to it. [7] The SDG Global Database is where the monitoring data for the SDGs is stored. [8] It is hosted and maintained by the United Nations Statistics Division (UNSD). [9]

In this discussion paper, we present well-known issues with the SDG monitoring system alongside less widely discussed problems, with the goal of consolidating a discourse that stakeholders can act upon. It is the third in a series of papers by Development Initiatives (DI) that explore the dynamics between national data ecosystems, donor interventions and data-consuming international institutions.

What did we learn from the MDGs?

When the UN set about developing the monitoring system for the SDGs it aimed to avoid the pitfalls of the MDG monitoring system. Right at the start of the process, the UN System Task Team on the post-2015 Development Agenda compiled and published its learnings in response to issues with the MDG monitoring system. Its findings included:

"The international community should be cautious of three dangers: overloading, being either too prescriptive or too vague, and donor-centrism."

The UN System Task Team on the Post-2015 UN Development Agenda, 2012. Realizing the Future We Want for All. Available at: https://www.un.org/millenniumgoals/pdf/Post_2015_UNTTreport.pdf

UNICEF was responsible for monitoring a number of indicators for the MDGs. Its presentation at the first meeting of the IAEG in June 2015 reflected on this experience:

"Key learned lessons were: good monitoring takes time; it is important to keep things simple and use national surveys; it is important to use a transparent method based on agreed rules and to make use of a strategic advisory group, expert task forces, and country missions; and it is important to ensure that the indicators are policy relevant."

Department of Economic and Social Affairs, 2015. First Meeting of the Inter-agency and Expert Group on SDG Indicators. Available at: https://unstats.un.org/sdgs/files/First%20meeting%20IAEG-SDGs%20-%20June%202015%20-%20Meeting%20report%20-%2024%20June%202015.pdf

Despite the good intentions outlined by the UN System Task Team and UNICEF, these aims have not been realised. In the global SDG monitoring system, a custodian agency leads on one or more indicators. [10] This means it is “responsible for compiling comparable international data series, calculating global and regional aggregates and providing them, along with the metadata, to the UNSD”. [11] As a part of this, custodian agencies often produce country-adjusted, modelled, estimated or global reporting data if there are problems with country data. [12], [13] Custodian agencies are expected to do this transparently and countries should be consulted on the methods they use. [14] However, in many cases, agencies have failed to adopt consultative and transparent engagement policies.

"The IAEG has taken a critical position on the procedures of some agencies in terms of the lack of transparency, communication and involvement of the NSO..."

This is against a starting position where the IAEG itself has been criticised for being a closed shop: https://onlinelibrary.wiley.com/doi/10.1111/1758-5899.12630

"Several countries mentioned that they would like […] these agencies to share with them the methodologies used to make the data internationally comparable."

Kapto, S. 2019. Layers of Politics and Power Struggles in the SDG Indicators Process. Available at: https://unstats.un.org/sdgs/files/meetings/iaeg-sdgs-meeting-04/Meeting%20Report%204th%20IAEG-SDGs%20Meeting.pdf

In addition to this, national surveys do not form the bedrock of monitoring data (in 2019, only 33.2% of data series were filled with data from countries). [15], [16] And, more often than not, expert task forces, responsible for supporting improvements to national capacity, have not fully met all of their objectives. For example, the leadership of the High-level Group for Partnership, Coordination and Capacity-Building (HLG-PCCB) has not been able to influence the consistency of engagements between custodian agencies and countries, nor has it been able to effectively build the capacities of national statistical systems (NSSs). [17]

How simple could it have been?

The Sustainable Development Solutions Network (SDSN) was established in 2012 by the then UN Secretary-General Ban Ki-moon. Its purpose was to help to implement the SDGs by mobilising global scientific and technological expertise. [18] In mid-2015, after discussions with many NSOs, SDSN recommended that the global indicator framework should consist of 100 indicators, because that was the maximum number of indicators that NSOs could be expected to report on. [19]

In March 2018, the IAEG submitted a discussion paper to the UN Statistical Commission in which guidelines on data flows and global data reporting were proposed. These included: [20]

"a) Global reporting on SDG indicators should be primarily based on data and statistics produced by NSSs."

"b) The coordinating role of NSOs in the NSSs should be encouraged and central to the reporting process…"

"i) Custodian agencies and NSSs are expected to work together towards ensuring the most transparent and efficient way of reporting SDG indicators for both National and International Statistical Systems. This means utilizing national SDG indicator reporting platforms, where available…"

"k) If adjustments and estimates of country data are made, custodian agencies are strongly recommended to undertake consultations with countries through a fully transparent mechanism…"

"l) International agencies and NSSs are strongly recommended to coordinate their data collection work and to establish effective and efficient data sharing arrangements to avoid duplication of efforts."

The following year the IAEG consolidated and summarised these proposals in its presentation to the Commission of its ‘Best Practices in Global Data Reporting’: [21]

- “Identify national statistical office (NSO) and Custodian Agency Focal Points.

- Share data collection calendar for SDG data requests.

- Provide clear and complete metadata by agencies to countries during data request and provide comprehensive metadata by countries to agencies when submitting their data.

- Use national data platforms and databases that contain sufficiently detailed information, including metadata, to allow data and metadata to be pulled directly for global SDG monitoring.

- Consult with countries on any harmonized, estimated, modelled or adjusted data through transparent mechanisms.

- Improve coordination within NSSs, among custodian agencies, and between NSSs and custodian agencies so that all involved parties are informed about data requests and are aware of who is providing the data and when and to whom the data is being provided.”

The proposals outlined by the IAEG provide a snapshot of how simple the global monitoring system could have been:

- Data for 100 indicators produced at the national level, through surveys, administrative systems, and non-traditional sources.

- Data passed on to custodian agencies to be compiled and then sent to the UNSD for inclusion in the global database.

It was, of course, never going to be that straightforward. The ensuing lack of coordination and control over the system’s frameworks and processes by international stakeholders has resulted in NSOs being placed under huge pressure (especially those in low-income countries; LICs). Furthermore, the system is marred by opaqueness and there is a general lack of trust in the data held in the global database.

Was complexity inevitable?

In all likelihood, the idea of limiting the SDGs to 100 indicators was a non-starter. Four months before the SDSN published its report, the expert group, a forerunner of the IAEG, met in New York. [22] While recognising “the enormous challenge of addressing a large number of targets”, it found itself in a quandary:

"While fully recognizing the independence of the political process and that the statistical community has no intention to enter into the discussion on the targets being agreed by the intergovernmental process, it was stressed that in the current list of goals and targets there are several inter-linkages and overlapping targets and that there could be some common indicators for targets where inter-linkages and overlapping are evident. Also, in some cases, the complexity of the target makes it very difficult to choose only one or two indicators."

UN Stats, 2015, Expert Group Meeting on the Indicator Framework for the Post-2015 Development Agenda, UNHQ, New York, 25–26 February 2015. Available at: https://unstats.un.org/unsd/statcom/doc15/BG-EGM-SDG-summary1.pdf

As the excerpt from the expert group’s report indicates, the framework was heading for at least one indicator for each of the 169 targets from the outset. At the IAEG’s first meeting in June 2015, one of its members observed that:

"The number of indicators (one per target) was viewed as still too large and interlinkages among goals 3, 4 and 5 should be exploited to identify multi-purpose indicators."

Department of Economic and Social Affairs, 2015. First Meeting of the Inter-agency and Expert Group on the Sustainable Development Goal Indicators. Available at: https://unstats.un.org/sdgs/files/First%20meeting%20IAEG-SDGs%20-%20June%202015%20-%20Meeting%20report%20-%2024%20June%202015.pdf

"... many agencies highlighted some targets where additional indicators may be needed and even put forward some new proposals for additional indicators."

Department of Economic and Social Affairs, 2017. The Fourth Meeting of the Inter-agency and Expert Group on the Sustainable Development Goal Indicators. Available at: https://unstats.un.org/sdgs/files/meetings/iaeg-sdgs-meeting-04/Meeting%20Report%204th%20IAEG-SDGs%20Meeting.pdf

Key informants have also told us that some powerful countries stood firm on indicators that they considered important. For example, the UK wanted to keep governance-related indicators. By 2017, 37 new indicators had already been tabled for formal consideration. [28] Furthermore, multi-purpose indicators were never really embraced, so their potential to reduce the overall number of indicators was never realised. International agencies, in pursuit of their missions, understandably review their best practices continually and adapt them to the latest knowledge and experience. This presents a further challenge when SDG indicators – which are meant to be consistent over a 15-year period – are revised midstream to reflect new practice.

Indicator 4.2.1, which monitors early childhood development, [29] is a case in point. The original indicator methodology was based on the 2009 version of UNICEF’s Multiple Indicator Cluster Survey. [30] In 2019, with the adoption of UNICEF’s improved Early Childhood Development Index 2030, [31] the SDG methodology was altered to cover a different age range and to include 20 different questions. [32]

The IAEG was well aware of the problems this kind of change would cause when it undertook a Comprehensive Review in 2020. It laid out several guiding principles to set the parameters within which the review would take place. These included: [33]

- "The review needs to take into account investments already made at the national and international levels and should not undermine ongoing efforts."

- "The revised framework should not significantly impose an additional burden on national statistical work."

- "There should be space for improvements, while at the same time ensuring that the changes are limited in scope and the size of the framework remains the same."

Who controls global data flows?

In addition to the proliferation and revision of indicators, the problem stakeholders have had exerting control over the SDG monitoring framework also extends to issues with data flows. Countries are at the base of the data-flow model. They are the primary source of data. In 2017, the UN Statistical Commission "strongly recommended that national data be used for global reporting and that adjustments and estimates of country data be undertaken in full consultation with countries and through fully transparent mechanisms…" [34] The IAEG was requested "to develop guidelines on how custodian agencies and countries can work together to contribute to the data flows necessary to have harmonized statistics." [35]

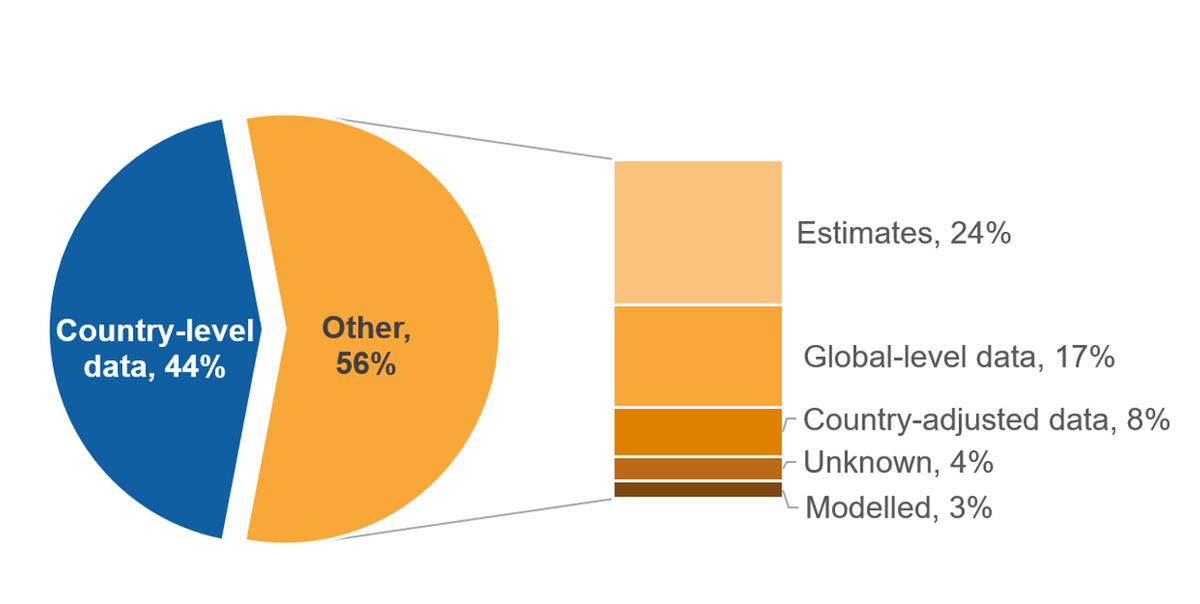

However, data that has been produced, estimated, modelled or adjusted by custodian agencies is currently more prevalent than country data in the global database. An analysis of 2019 data reveals that only 44% of the 194 indicators are being populated primarily by country data (see Figure 1).

Figure 1: Primary source of data in the SDG Global Database

Country data accounts for less than half of primary data in the SDG Global Database

Note: The categorisations used here, known as 'data natures', denote what type of source a value is derived from (e.g., 'country data' is data sourced directly from countries, 'estimated data' is estimates made by custodian agencies, etc.). This chart extracts the most numerous ‘nature’ for each indicator reported for 2019; 2019 was chosen as the year in which most observations were recorded at the time of access in April 2022.
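The extraction described in the note above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DI's actual analysis code: the record layout (indicator, year, nature), the function names and the sample values are all hypothetical, and ties between natures are broken by whichever nature appears first.

```python
from collections import Counter, defaultdict

# Hypothetical observations: (indicator, year, data nature).
# Real records would come from an export of the SDG Global Database.
observations = [
    ("3.1.2", 2019, "country data"),
    ("3.1.2", 2019, "country data"),
    ("3.1.2", 2019, "estimated data"),
    ("3.2.1", 2019, "estimated data"),
    ("3.2.1", 2019, "estimated data"),
    ("3.2.1", 2018, "country data"),  # ignored: not the chosen year
]

def primary_nature(records, year):
    """Return the most numerous data nature for each indicator in `year`."""
    counts = defaultdict(Counter)
    for indicator, obs_year, nature in records:
        if obs_year == year:
            counts[indicator][nature] += 1
    return {ind: c.most_common(1)[0][0] for ind, c in counts.items()}

def share_primarily(primary, nature):
    """Share of indicators whose primary nature equals `nature`."""
    return sum(1 for n in primary.values() if n == nature) / len(primary)

primary_2019 = primary_nature(observations, 2019)
country_share = share_primarily(primary_2019, "country data")
```

Applied to the full 2019 extract, a calculation of this shape would yield the share of indicators populated primarily by country data that the chart reports.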

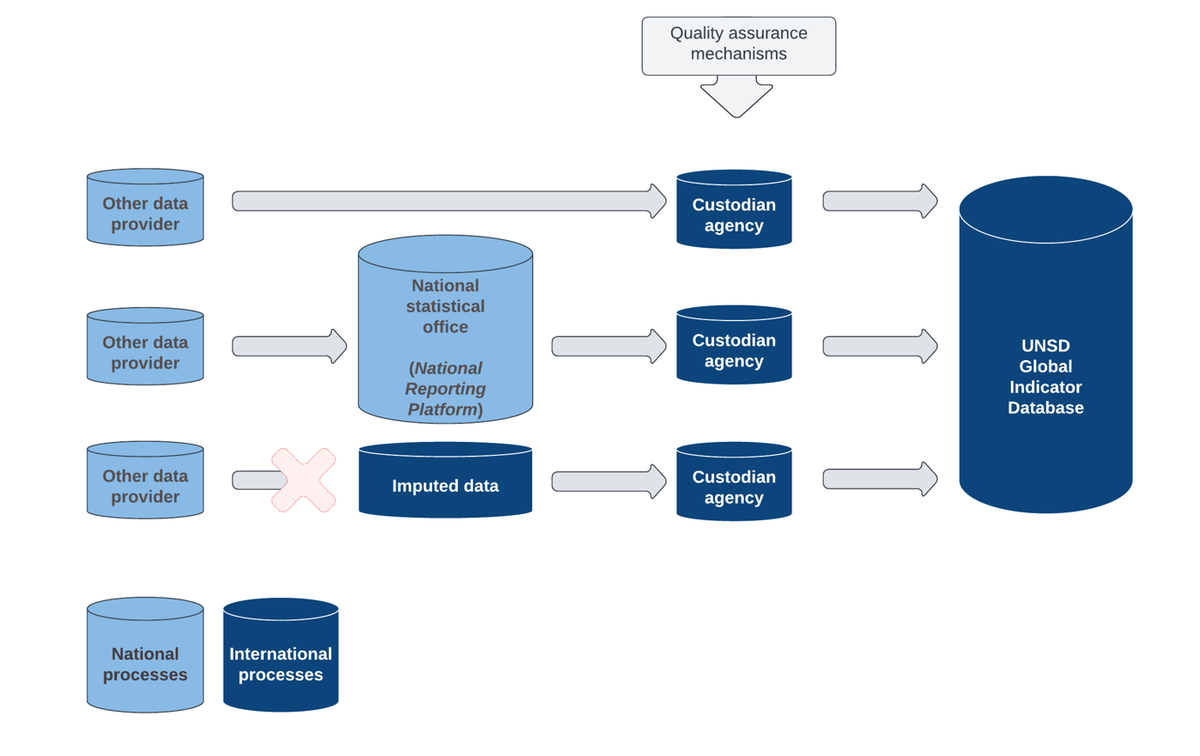

Figure 2: Planned SDG data flows

Source: this flow diagram is redrawn from the IAEG’s 'best practices' document, originally produced in 2017 by a UNECE task force responsible for compiling guidelines for countries to facilitate decisions about reporting on the SDGs. United Nations Economic Commission for Europe, 2017. Guidelines for national SDG-indicators reporting mechanisms.

Note: the document presents two models: centralised and decentralised. This is the centralised version for NSOs that are responsible for all official statistics. The diagram has been redrawn but accurately reflects the content of the original.

Figure 2 puts the NSO at the heart of the data ecosystem – both in terms of data flows as well as quality assurance. All national data flows through the NSO. Data estimated or modelled by custodian agencies is referred back to the NSO for validation.

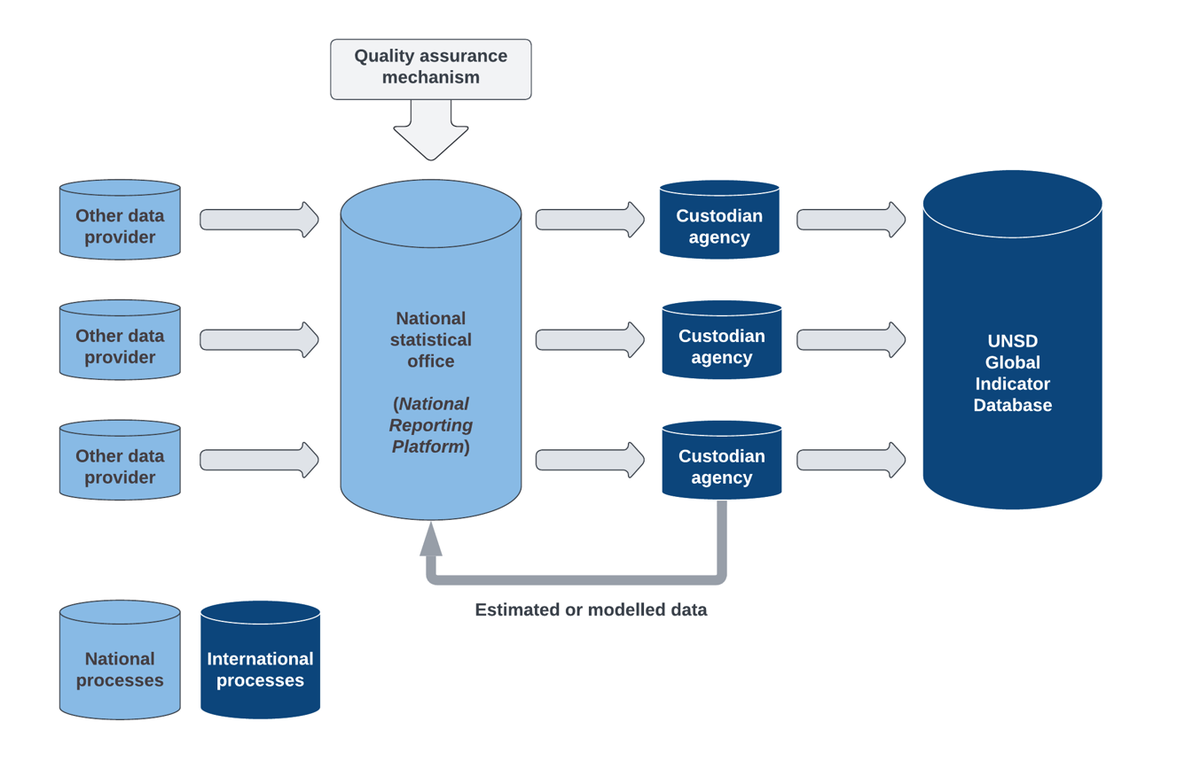

Figure 3 is DI’s modification of the original planned flow diagram (Figure 2) to reflect current practice. Some data flows directly from government ministries, departments and agencies to custodians without NSO knowledge or validation. Other indicators are constructed by custodians from imputed data. There is no feedback loop for modelled or estimated data. And quality assurance rests with the custodians, not the NSO. Global custodians, not NSOs, have become the heartbeat of the SDG monitoring framework.

Are the SDGs country-led?

If one reads through the reams of documentation that the development of the SDG monitoring system has produced, one will certainly come across a mantra synonymous with the idea that SDG monitoring needs to be country-led.

For example, in June 2012 the UN System Task Team highlighted the value of context-specific monitoring:

"Global goals and targets should not be confused with national targets. Development processes are context specific. Therefore, to be meaningful, global goals and targets must be tailored and adapted to national and regional contexts and initial conditions."

The UN System Task Team on the Post-2015 UN Development Agenda, 2012. Realizing the Future We Want for All.

In June 2015, national ownership was in clear focus:

"… the need to ensure national ownership of the global indicator framework…"

Department of Economic and Social Affairs, 2015. First Meeting of the Inter-agency and Expert Group on the Sustainable Development Goal Indicators.

In January 2016, IAEG members were concerned with defining clear roles and responsibilities:

"There was also agreement among members on the need to define roles of national statistical systems, international agencies, and other stakeholders in the collection, compilation, transmission, and aggregation of data, with a view to ensure coherence between national, regional, and global levels, while ensuring that the process is country-led and that national statistical systems retain the ownership of the indicators."

Department of Economic and Social Affairs, 2016. Third Meeting of the Inter-agency and Expert Group on the Sustainable Development Goal Indicators.

And in March 2020, the IAEG appeared to favour national prioritisation of indicator selection:

"… the application of the global indicator framework is a voluntary and country-led process and that alternative or complementary indicators for national and subnational levels of monitoring will be developed at the national level on the basis of national priorities, realities, capacities and circumstances."

UN Stats, 2020. Report on the fifty-first session of the UN Statistical Commission.

Certainly, stakeholders talk the talk, but have they walked the walk?

You will find many commentaries which assert that the influence of custodian agencies on the SDG monitoring system is limited, primarily because the IAEG consists solely of countries and international agencies only have observer status. [36] Yet, in reality, custodian agencies have had a lot more influence than is commonly admitted.

At the outset there were clear channels for them to effectively get their points across. They were invited to provide inputs on proposed indicators, and in cases where “multiple indicators were proposed under one target, precedence was in general given to the proposals by agencies with a mandate in the specific area and/or already responsible for global monitoring on the specific indicator.” [37] Following on from this, “starting with its fourth meeting, [the IAEG] rejigged the format of its meetings to formalise more time for participation by and interaction with UN agencies”, by instituting the system of custodians and partner agencies. [38] In effect, custodian agencies can also drop an indicator from the framework if they all refuse to lead on it. [39]

Custodian influence extends beyond their role in defining and overseeing indicator methodology. The fact that it is custodians, not countries, that submit data to the Global Database is where custodian influence really lies.

The IAEG’s ‘Best practices in Data Flows and Global Data Reporting’ background document [40] recognises that “many countries have developed or are developing national data platforms (SDG specific and more general) in order to disseminate their data more effectively to users” and that using a national data platform “helps improving accountability, quality assurance, coordination and accessibility for SDG data. It also helps strengthen the central coordination role of NSO within NSS.” [41]

Table 1 shows available Malaysian data for SDG3 (‘Good health and well-being’) between 2018 and 2020, comparing data published by Malaysia’s Department of Statistics [42] with data currently published in the UN’s global SDG database. [43] Of the 17 indicators for which Malaysia has published data only seven are replicated accurately in the Global Database. Three are not present at all and ten in the Global Database are classified [44] as estimates.

Table 1: Comparison of Malaysia’s national and global data for SDG 3

| SDG | Description | Custodian | Global nature | 2018 national | 2018 global | 2019 national | 2019 global | 2020 national | 2020 global |
|---|---|---|---|---|---|---|---|---|---|
| 3.1.1 | Maternal mortality ratio | WHO | - | 23.5 | - | 21.1 | - | 24.9 | - |
| 3.1.2 | Skilled attendance at birth [C] | UNICEF | Country | 99.6* | 99.6* | 99.6* | 99.6* | 99.6 | - |
| 3.2.1 | Under-five mortality rate | UNICEF | Estimate | 8.8** | 8.4** | 7.7** | 8.6** | 6.9** | 8.6** |
| 3.2.2 | Neonatal mortality rate | UNICEF | Estimate | 4.6** | 4.5** | 4.1** | 4.6** | 3.9** | 4.6** |
| 3.3.1 | HIV infections per 1,000 | UNAIDS | Estimate | 0.2* | 0.16* | 0.2* | 0.18* | 0.2* | 0.19* |
| 3.3.2 | TB incidence per 100,000 | WHO | Estimate | 78.6** | 92** | 80.9** | 92** | 72.6** | 92** |
| 3.3.3 | Malaria incidence per 1,000 | WHO | - | 0.1 | - | 0.1 | - | 0.1 | - |
| 3.4.1 | Mortality rates (cardio, cancer, etc.)* | WHO | Estimate | - | - | - | - | - | - |
| 3.4.2 | Suicide mortality rate per 100,000 | WHO | Estimate | 0.1 | - | 0.0** | 5.7** | 0.0 | - |
| 3.6.1 | Death rate due to road traffic injuries per 100,000 | WHO | Estimate | 18.1 | - | 17.7** | 22.5** | 13.2 | - |
| 3.7.1 | Adolescent birth rate 10–14 | UNDESA | Country-adjusted | 0.1 | - | 0.1* | 0.1* | 0.1 | - |
| 3.7.1 | Adolescent birth rate 15–19 | UNDESA | Country-adjusted | 8.5 | - | 8.2** | 8.6** | 7.6 | - |
| 3.8.2 | Proportion of population with 25% spend on health | WHO | Country | - | - | 0.13* | 0.13* | - | - |
| 3.8.2 | Proportion of population with 10% spend on health | WHO | Country | - | - | 1.52* | 1.52* | - | - |
| 3.a.1 | Age-standardised prevalence of tobacco use | WHO | Estimate | - | 22.8 | 20.7** | 22.8** | - | 22.5 |
| 3.b.1 | Proportion of target population covered by vaccines (DTP) | WHO | Estimate | 110.2 | 99 | 98.4* | 98* | 97.7* | 98* |
| 3.b.1 | Proportion of target population covered by vaccines (HPV) | WHO | Estimate | 82.2* | 83* | 84.4* | 85* | 82.6** | 84** |

Note: National data comes from the Malaysia SDG portal. Global data comes from the UNSD Global Database. Both were accessed on 19 April 2022. * Indicates a match between national and global data. ** Indicates a discrepancy between national data and the global database.
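The match and discrepancy flags in Table 1 amount to a value-by-value comparison of the two sources. A minimal Python sketch follows, using four of the 2019 values from the table. The helper name and the exact-equality rule are assumptions made for illustration: the paper does not state how matches were determined, and some pairs flagged as matches in the table (for example 0.2 against 0.18) suggest that rounding was allowed for, which this sketch does not attempt to reproduce.

```python
# 2019 values for Malaysia copied from Table 1 as (national, global);
# None marks a value that is absent from that source.
rows = {
    "3.1.1": (21.1, None),
    "3.1.2": (99.6, 99.6),
    "3.2.1": (7.7, 8.6),
    "3.3.2": (80.9, 92.0),
}

def compare(national, global_value):
    """Classify one national/global pair (hypothetical helper)."""
    if national is None or global_value is None:
        return "missing"
    # Assumption: only exactly equal values count as a match.
    return "match" if national == global_value else "discrepancy"

flags = {sdg: compare(nat, glo) for sdg, (nat, glo) in rows.items()}
```

Here `flags` marks 3.1.2 as a match, 3.2.1 and 3.3.2 as discrepancies, and 3.1.1 as missing because no global value is published.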

This example for Malaysia is just one of many where the values stated in a country’s national portal are different to those in the Global Database. [45] There are also gaps in the Global Database that need not be there, because the data for a particular series in a particular year is available via the national portal. For example, values for the years 2016, 2017, 2018 and 2020 for the data series ‘Death rate due to road traffic injuries, by sex (per 100,000 population; indicator 3.6.1)’ are available via the Philippines portal, but no values are given in the Global Database. Similarly, values for the years 2016–2019 for the data series ‘Participation rate in organized learning (one year before the official primary entry age), by sex (%) (indicator 4.2.2)’ are available via Ethiopia’s portal, but no values are given in the Global Database. This is the same for at least 12 data series in relation to Uganda. [46]

Seemingly, custodians are missing the opportunity to use national data even though it is readily accessible. These systematic oversights damage the perception that the global system is doing all it can to utilise national data, something which NSOs surely do not appreciate. There is, of course, the caveat that agencies don’t believe the data (or metadata) in the national portals is “sufficiently detailed”. [47] While this may be the case sometimes (some of the platforms we reviewed did not contain key pieces of metadata), it certainly is not the case all of the time. And, if the platforms are not used, what is the incentive to improve them?

Is this just a communication breakdown?

Curation of the SDG indicators is shared across 38 international agencies as illustrated in Table 2. This means that the governance of the framework is widely distributed and the maintenance of common standards across all custodians is challenging. While the UNSD is responsible for hosting the Global Database it is the custodians who are de facto responsible for quality assurance.

Table 2: SDG custodian agencies

| Custodian | Indicators curated | Custodian | Indicators curated |
|---|---|---|---|
| WHO | 25 | ITU | 4 |
| UNEP | 22 | UNDP | 4 |
| FAO | 21 | UNFCCC | 4 |
| UNESCO | 19 | ITC | 3 |
| World Bank | 17 | PARIS21 | 3 |
| OECD | 15 | UN Women | 3 |
| UNICEF | 14 | UNCTAD | 3 |
| ILO | 13 | UNSD | 3 |
| UNDRR | 11 | IEA | 2 |
| UNODC | 11 | IRENA | 2 |
| UN-Habitat | 10 | IUCN | 2 |
| UNIDO | 6 | UNFPA | 2 |
| OHCHR | 5 | UNWTO | 2 |
| UNDESA | 5 | Others | 13 |
| IMF | 4 | | |

Note: Data aggregated from UN SDG Indicator metadata repository: Data collection Information and Focal points. Available at: https://unstats.un.org/sdgs/dataContacts/

The IAEG, recognising in its best practice documentation that “communication is the key issue to understand data flows and to ensure that all parties are informed of the data being transmitted and of any harmonization that takes place” [48] maintains that “NSOs should be the coordinator of the national statistical system” and that they should be consulted on all matters pertaining to their country’s data.

"In order for custodian agencies to ensure that data are internationally comparable, values are sometimes adjusted and no longer match the figure reported at the national level. In these cases, it is essential that a detailed explanation of the process and methodologies used to adjust the data be provided to the country and that the country has the opportunity to comment on this new value."

UN Stats, 2019. Guidelines on Data Flows and Global Data Reporting for Sustainable Development Goals. Available at: https://unstats.un.org/unsd/statcom/50th-session/documents/BG-3a-Best-Practices-in-Data-Flows-and-Global-Data-Reporting-for-theSDGs-E.pdf

The IAEG has produced guidelines to regulate this process. To upload adjusted, estimated, modelled or global reporting data to the Global Database, a custodian agency must get it validated by the country. A national data provider (usually the NSO) has two weeks to respond to a request made by a custodian agency for validation. If it does not respond within the timeframe, the protocol treats this as consent to the data's use. [49] Two weeks is a short turnaround, especially given the capacity constraints many NSOs face, particularly in LICs. Moreover, a UN Economic Commission for Europe (UNECE) study of 38 (mostly European) countries showed that “when country focal points were identified by custodian agencies, they were often out of date” [50] and that in “a worst case scenario, the custodian agency believes that it is communicating effectively and mistakenly believes that the country is not responsive.” [51] Mistake or not, out-of-date contact details equal "consent" for custodians to use their own data.
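The effect of the two-week rule can be made concrete with a short Python sketch. It is an illustration only: the function name, the status labels and the treatment of the boundary day are assumptions, and the guidelines themselves are not expressed in code.

```python
from datetime import date, timedelta

# Two-week response window described in the text.
VALIDATION_WINDOW = timedelta(weeks=2)

def validation_status(requested: date, today: date, responded: bool = False) -> str:
    """Status of a custodian's validation request (hypothetical labels)."""
    if responded:
        return "country responded"
    # Assumption: consent is deemed once more than 14 days have elapsed.
    if today - requested > VALIDATION_WINDOW:
        return "deemed consent"
    return "awaiting response"

# A request sent to an out-of-date contact never receives a reply,
# so it moves to "deemed consent" once the window has passed.
early = validation_status(date(2019, 1, 1), date(2019, 1, 10))
late = validation_status(date(2019, 1, 1), date(2019, 1, 16))
```

Nothing in this logic distinguishes a country that chose not to reply from one that never received the request, which is the weakness the UNECE study points to.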

"... most responding countries indicated they did not receive a request to validate the data."

United Nations Economic Commission for Europe, 2019. Results of the UNECE 2018 Pilot Study of Data Flows from Country to Custodian Agencies Responsible for SDG Indicators. Available at: https://statswiki.unece.org/display/SFSDG/Task+Team+on+Data+Flows+for+SDGs?preview=/128451079/255493363/2018%20Data%20Flows%20Pilot%20-%20Final%20Report.pdf

Similarly, in early 2019, the co-chair of the IAEG reflected that:

"The IAEG SDG has taken a critical position on the procedures of some agencies in terms of the lack of transparency, communication and involvement of the NSO in producing global, thematic and regional indicators, either through estimates, imputations, data modelling or conducting surveys in various countries."

Ordaz, E. 2019. The SDG Indicators: A Challenging Task for the International Statistical Community. Available at: https://onlinelibrary.wiley.com/doi/epdf/10.1111/1758-5899.12631

Who is responsible for capacity development?

In 2015, prior to the first meeting of the IAEG, the UN Secretariat completed an evaluation and concluded that “significant efforts will be necessary in order to… build the national statistical capacities” for the SDG monitoring system to work. [52] A similar observation had already been made in June 2012 by the UN System Task Team, [53] and was repeated consistently throughout 2016 by the IAEG and the Statistical Commission. [54], [55] Alongside this, the High-level Group for Partnership, Coordination and Capacity-Building for post-2015 monitoring (HLG-PCCB) was established with “two key roles”:

- “… as the voice of official statistics”

- “… as the lead in effective statistical capacity building efforts to support the implementation of the 2030 Agenda.” [56]

The objective was unequivocal: countries that can’t report will be helped.

Over the last seven years DI has studied the national data ecosystems of countries around the world, including Zimbabwe, Uganda, Nigeria, United Arab Emirates, South Sudan, Bangladesh, Malaysia and Nepal. In most of these countries the NSS is blighted by capacity concerns. Duplication and a lack of harmonisation run rampant. The learnings from these studies can be viewed in detail in the previous DI discussion papers in this series, the Data side of leaving no one behind (2021) and Data disharmony (2022).

This continued lack of capacity can be gauged by the continued gaps between the data countries need to produce for SDG monitoring and the data that they are producing. Analysis shows that in 2015 only 34.5% of data series were filled with data from countries and that this actually fell to 33.2% in 2019. [57] In addition to this, there is a very similar situation in relation to data series with values for 60 countries and over, while the overall proportion does rise slightly, it was still only 37.9% in 2015 and 36.3% in 2019. [58] While high-income and middle-income countries have a similar level of performance, low-income countries lag behind. [59]

"Most national statistical offices cannot implement the SDG indicator framework without adequate resources. National governments and international donors should give higher priority to supporting these needs."

Sakiko, F-P. and McNeill, D., 2019. Knowledge and Politics in Settings and Measuring SDGs. Available at: https://onlinelibrary.wiley.com/doi/epdf/10.1111/1758-5899.12604

It is true that national governments should foot most of the bill needed to develop their statistical systems. For the most part, they too have fallen woefully short. The current state of play is not entirely the fault of custodian agencies.

"There is no indication from member states where the funding to support data production and statistical capacity development for the 2030 Agenda would come from."

Kapto, S. 2019. Layers of Politics and Power Struggles in the SDG Indicators Process. Available at: https://onlinelibrary.wiley.com/doi/epdf/10.1111/1758-5899.12630

Furthermore, despite the IAEG viewing custodian agencies as indispensable components of the process to improve national statistical capacities, doing so is not part of their official mandates. Therefore, the IAEG is expecting custodians to do something that they have not been mandated to do. Nonetheless, these facts do not exonerate agencies that have failed to fulfil their part of the bargain, which, officially or not, they have agreed to. There is also little evidence from these agencies to suggest that a wind of change is just around the corner.

Do we have comparable data?

The Global Database is at the centre of the global SDG monitoring system. It is meant to be a one-stop-shop for policymakers, data scientists, data journalists and citizens to track the progress of countries towards meeting the SDGs. For it to be considered successful, the data it stores must be reliable, comparable, and easily understandable. [60]

Data natures are critical to understanding the data in the database. Data natures are categorisations that denote what type of source a value is derived from (e.g., country data is data sourced directly from countries, estimated data is estimates made by custodian agencies, etc.). Stakeholders have told us that the system of data natures has left some custodian agencies confused, which has led to them reporting incorrect natures.

Incorrect reporting leads to a lack of consistency in application, which in turn manifests in an array of natures being reported when it is reasonable to expect one or maybe two. For example, in 2019 there are four variations of natures for the data series ‘Total population in moderate or severe food insecurity (thousands of people)’ across the 43 Sub-Saharan African countries that have data. A closer look muddies the water even more. One of the natures given multiple times is labelled “country adjusted plus global reporting data”. The definition of the former is data “produced and provided by the country but adjusted by the international agency”; and the definition of the latter is data “produced on a regular basis by the designated agency for global monitoring, based on country data.” It is hard to tell for certain, but the difference presumably lies in the level of the custodian agency’s involvement. The definition of country-adjusted data implies a figure just needs tweaking, whereas the definition of global reporting data implies heavy-handedness. Yet these appear side by side as one conjoined nature for one value. This raises the question: what exactly is it that we’re looking at?
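The consistency check described above can be sketched in a few lines of Python. This is a minimal illustration only, not the method used for the analysis in this paper: the record layout (series, country, year, nature), the country codes and the sample rows are all hypothetical, and the Global Database itself may structure its data differently.

```python
from collections import defaultdict


def natures_by_series(records, year):
    """Collect the distinct data natures reported for each series in one year.

    `records` is an iterable of (series, country, year, nature) tuples.
    This layout is an assumption made for illustration.
    """
    natures = defaultdict(set)
    for series, _country, rec_year, nature in records:
        if rec_year == year:
            natures[series].add(nature)
    return dict(natures)


# Hypothetical sample rows; the nature labels echo those discussed in the text.
records = [
    ("food_insecurity", "AAA", 2019, "country data"),
    ("food_insecurity", "BBB", 2019, "estimated data"),
    ("food_insecurity", "CCC", 2019, "country adjusted plus global reporting data"),
    ("food_insecurity", "DDD", 2019, "country data"),
    ("food_insecurity", "AAA", 2018, "global reporting data"),
]

result = natures_by_series(records, 2019)
for series, found in result.items():
    # A series with more than one or two natures deserves a closer look.
    print(series, len(found), sorted(found))
```

A series that returns more than one or two natures for a single year would be a candidate for the kind of closer inspection described above.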

In addition to this, duplication undermines user trust in the Global Database. There are 37 data series where over 10% of countries have two or more values for the same series in the same year; [61] 29 where over 25% do; [62] 11 where over 50% do; [63] and three where 100% do. [64] Which of the multiple values should users believe? Uncertainty about a) data sources and b) the reliability of the values listed erodes the credibility of data in the Global Database, and, therefore, of the database itself. It is notable that the flagship Sustainable Development Report produced by SDSN doesn’t rely on the Global Database. It doesn’t even subscribe to the official list of indicators! [65]
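One way to measure duplication of this kind is sketched below. It is a hedged illustration rather than the calculation behind the figures above: the tuple layout, series names and country codes are hypothetical, and we assume that a country counts as duplicated if it has two or more values for a series in any single year, with all countries that report on the series as the denominator.

```python
from collections import defaultdict


def duplicate_share(records):
    """Return, per series, the share of countries with 2+ values in one year.

    `records` is an iterable of (series, country, year, value) tuples.
    The layout and the choice of denominator are assumptions.
    """
    counts = defaultdict(int)  # (series, country, year) -> number of values
    for series, country, year, _value in records:
        counts[(series, country, year)] += 1

    countries = defaultdict(set)   # series -> all reporting countries
    duplicated = defaultdict(set)  # series -> countries with duplicates
    for (series, country, _year), n in counts.items():
        countries[series].add(country)
        if n >= 2:
            duplicated[series].add(country)

    return {s: len(duplicated[s]) / len(countries[s]) for s in countries}


# Hypothetical sample rows for illustration only.
records = [
    ("series_a", "AAA", 2019, 10.0),
    ("series_a", "AAA", 2019, 12.5),  # second value, same country and year
    ("series_a", "BBB", 2019, 8.0),
    ("series_a", "CCC", 2019, 7.0),
    ("series_a", "DDD", 2019, 9.0),
    ("series_b", "AAA", 2019, 1.0),
]

shares = duplicate_share(records)
flagged = [s for s, share in shares.items() if share > 0.10]
print(shares, flagged)
```

Changing the threshold in the last step (10%, 25%, 50%) would reproduce the style of banding used in the paragraph above, under the stated assumptions.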

Should we be accountable for our commitments?

A recurring theme in this discussion paper is that custodian agencies have failed to meet various agreed commitments. The challenges we have outlined so far are lower-than-demanded use of country data; a lack of transparency when using estimated, modelled, adjusted or global reporting data; misreporting of data natures; and the underuse of country portals.

"Most countries reported not knowing the process by which globally harmonised country data are provided and released on the UN SDGs website. In some cases, countries reported that it was not clear why national data in the global database were not fully aligned with data they provided in an intermediary database."

United Nations Economic Commission for Europe, 2018. Results from the 2017 Data Flow Pilot Study. Available at: https://statswiki.unece.org/display/SFSDG/Task+Team+on+Data+Flows+for+SDGs?preview=/128451079/255493334/2017-Data-Flow-Report.pdf

Unfortunately, this is not the end of the story. Custodian agencies’ lack of transparency also extends to collecting survey data for the SDGs in countries without the required level of involvement of NSOs. [66] It also includes a “lack of coordination across the international statistical system with the same information being requested several times by multiple organisations”, [67] a situation that countries have expressed frustration with. [68]

"Communication and coordination among international organisations should be enhanced to avoid duplicate reports, ensure consistency of data and reduce response burden on countries."

United Nations Economic Commission for Europe, 2018. Results from the 2017 Data Flow Pilot Study. Available at: https://statswiki.unece.org/display/SFSDG/Task+Team+on+Data+Flows+for+SDGs?preview=/128451079/255493334/2017-Data-Flow-Report.pdf

The IAEG classifies all SDG indicators into three tiers based on their level of methodological development and the availability of data at the global level. A tier one indicator is “conceptually clear, has an internationally established methodology and standards are available, and data are regularly produced by countries for at least 50 percent of countries and of the population in every region where the indicator is relevant.” [69] More often than not, the problems with custodian agencies apply as much to tier one indicators as they do to tiers two and three. For example, the vast majority of the data series discussed in this report belong to tier one. The consultation process through which an indicator is classified is thorough and has been a mainstay of IAEG meetings. Misdiagnosis of tier status by the group is unlikely. This raises the question of why it has been so difficult to report in line with the rules, even when it should be easy to do so.

There is a mixed bag of reasons why custodians have consistently fallen short of their responsibilities. It would be unfair to put all of the blame for this at their doorsteps. The IAEG wavered on its position regarding their observer status and nudged the door open for them. The group needed a way to get agencies “to devote resources to the SDG indicators process” and came to view them as essential in supporting “the development of indicators, [assisting] developing countries in data collection and [strengthening] national statistical capacity.” [70] If custodians haven’t had the capacity to provide the expected support, they have not voiced this as a reason for the paucity of country data.

However, custodians have no one but themselves to blame for their overuse of non-country data when country data is available or for their lack of consultation with countries when doing so.

Conclusion: Who should own this problem?

A monitoring framework that works is an essential component of the overall SDG agenda. Without one we can't know what's been achieved and what hasn't; we can't monitor uneven progress between countries; and we are missing opportunities to better allocate international resources. Furthermore, the development of all countries' national statistical systems is a vital component of sustainable development in and of itself.

The answer to the question “Is the SDG monitoring system broken?” is, at this point, “yes”. Halfway through the post-2015 agenda, a transparent and simple system, based primarily on national data produced by empowered NSOs, has not been put in place. Instead, the system is opaque and complicated.

There is one simple, overriding recommendation to be made to all global custodians. It is to honour the commitments already made in supporting NSOs to become the engine rooms that must drive the global monitoring framework.

It is not too late for the framework to be reformed. These reforms should be based on the guiding principle that SDG monitoring should primarily be a national exercise. This principle reflects the fact that the work to meet the SDGs is done at the national and sub-national level.

A reformed SDG monitoring system should consist of national agencies producing data for the indicators that align with their national development priorities and displaying this on their national portals. At the global level, all that would be required of UNSD is to develop and maintain a webpage that links to countries’ portals and, if necessary, to produce aggregate statistics for a few core indicators.

The role of the IAEG and custodians would be to maintain standards. The primary weight of responsibility on international institutions would swing back to the HLG-PCCB and its critical mandate “to promote the development of national statistical systems”.

Much has been said about the need for the SDGs to be country-led. This is the central pillar of our approach. In ‘The data side of leaving no one behind’ we concluded that:

"… governments need to own their own problems and solutions. They need to own their own data on behalf of their citizens. And for this to be sustainable they need to finance it."

Development Initiatives, 2021. The data side of leaving no one behind. Available at: /resources/data-side-leaving-no-one-behind/

Our follow-up paper ‘Data disharmony’, which focused on the lack of donor harmonisation, echoed this:

"Developing country governments are best placed to lead the way out of this problem. By deciding to manage their own prioritisation of investments, governments are in a far stronger position than they may appreciate."

Development Initiatives, 2022. Data disharmony: How can donors better act on their commitments? Available at: /resources/data-disharmony-how-can-donors-better-act-on-their-commitments/

In this, our third contribution, our conclusion remains the same. A reformed monitoring system would provide governments with incentives to own the problems with their NSOs and NSSs tied to SDG monitoring, precisely because they would now resonate fully with their own independent developmental interests.

Notes

[1] UN System Task Team, 2012. Realizing the Future We Want for All. Available at: www.un.org/millenniumgoals/pdf/Post_2015_UNTTreport.pdf

[2] Development Solution Network, 2015. Indicators and a Monitoring Framework for the Sustainable Development Goals. Available at: https://sustainabledevelopment.un.org/content/documents/2013150612-FINAL-SDSN-Indicator-Report1.pdf

[3] UN Economic and Social Commission for Asia and the Pacific, 2023. Asia and the Pacific SDG Progress Report, 2023: Championing sustainability despite adversities. Available at: https://www.unescap.org/kp/2023/asia-and-pacific-sdg-progress-report-2023

[4] The Open Working Group on the SDGs was created in 2013 as a result of the UN Conference on Sustainable Development that took place in 2012. It was tasked with preparing a proposal on the SDGs, which it finished in late 2014. The UN System Task Team on the post-2015 UN development agenda was also influential at the beginning. The 2030 Agenda for Sustainable Development is available at: https://sdgs.un.org/2030agenda

[5] The United Nations, no date. The Sustainable Development Agenda. Available at: https://www.un.org/sustainabledevelopment/development-agenda-retired/
